rgiu70

Forum Replies Created

-

rgiu70

Member, October 24, 2024 at 1:21 pm, in reply to: How to use scan to obtain cumulative value of a function

Thank you very much for your time.

Something like this is what I need:

v:{[x;f;l;h] c:distinct x,f;c where not c within (l;h)};

For example, in the uploaded photos, the levels (previously filtered to those with volume above 3000) are not reached again for some time, so they must remain in the cumulative list of naked levels. The first time the price crosses one of these levels, that level should be removed from the cumulative list.
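A minimal sketch of how `v` could be driven by scan across days. The daily inputs `lv`, `lo` and `hi` are made-up values for illustration, not data from the thread, and the scan starts from an empty list of naked levels.

```q
/ v as posted above: append new levels f to the running list x,
/ then drop every level that lies inside the day's [l;h] range
v:{[x;f;l;h] c:distinct x,f;c where not c within (l;h)};

/ hypothetical inputs: new levels per day, plus each day's low and high
lv:(1.09385 1.0939 1.09395;enlist 1.0951;`float$());
lo:1.094 1.0935 1.0948;
hi:1.096 1.0945 1.0952;

/ scan v over the days; each result item is the naked list after that day
naked:v\[`float$();lv;lo;hi]
```

With these assumed numbers, the three day-one levels survive day one because its low stays above them, they are dropped on day two when the low crosses them, and the level added on day two is dropped on day three. Note that `v` as written also drops a level on the very day it is created if that day's range already covers it.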

Why do I want to identify these levels? Because I want to “trigger” potential reversal signals only if they occur within a range (with a margin) around these naked levels.
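The margin check could look roughly like the sketch below. The function name `nearLevel`, the margin `m` and all the prices are hypothetical, chosen only to illustrate the idea of accepting a signal when it sits close enough to any naked level.

```q
/ hypothetical inputs: current naked levels, a margin, candidate signal prices
naked:1.0951 1.0972;
m:0.0002;
px:1.09495 1.096 1.0973;

/ nearLevel: 1b when price p is within m of at least one level in lv
nearLevel:{[lv;m;p] any m>=abs lv-p};

/ one flag per candidate price
ok:nearLevel[naked;m] each px
```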

Thank you again for your guidance and your time.

Giulio

-

rgiu70

Member, October 23, 2024 at 4:20 pm, in reply to: How to use scan to obtain cumulative value of a function

Thank you for your response. My problem is that on 2024.01.04 the low is below the levels 1.09385, 1.0939 and 1.09395, so, according to the function’s logic, the cumulative list should drop those values from 2024.01.05 onwards. However, levels that were not crossed upwards or downwards should be kept. This is what I’m not able to achieve.

To recap:

The column of cumulative values must accumulate the list of levels, but when the high or low crosses a level, that level must be removed from the list. If a level generated on the first trading day is never touched, it must remain in the ‘cumulative’ list until it is touched.

-

rgiu70

Member, August 27, 2024 at 10:21 am, in reply to: How to deserialize a Kafka topic message with kfk.q

-

rgiu70

Member, August 27, 2024 at 10:16 am, in reply to: How to deserialize a Kafka topic message with kfk.q

Thank you very much for your response.

I have tried to adapt the helper to the model I am using, and it seems to be working…

The problem I am encountering is that I still cannot populate the hdb table with the incoming data from the Kafka topic (which should now be correctly deserialized).

Could you please take a look and let me know if you notice any errors?

Thank you very much for your patience.

schema – (sym.q)

trade:([]time:

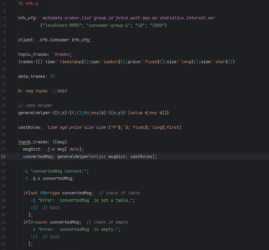

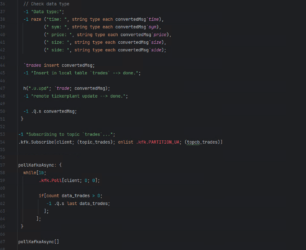

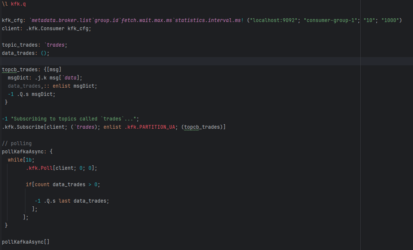

timestamp$();sym:symbol$();price:float$();size:long$();side:char$())<br></pre><p><br></p><p>consumer.q</p><p><br></p><pre>\l kfk.q<p>kfk_cfg: <i>metadata.broker.listgroup.idfetch.wait.max.msstatistics.interval.ms</i>!<br> ("localhost:9092"; "consumer-group-1"; "10"; "1000")</p><p>client: .kfk.Consumer kfk_cfg;</p><p>topic_trades: <i>trades;

trades:([] time:timestamp$();sym:<span style="background-color: var(--bb-content-alternate-background-color); font-size: 1rem; color: var(--bb-body-text-color);">symbol$();price:float$();size:long$();side:char$())</span></p><p>data_trades: ()<br><br>h: neg hopen <i>::5010

// Json Helper

generalHelper:{[t;d]![t;();0b;key[d]!{(x;y)}'[value d;key d]]}castRules:

timesympricesizeside</i>!("P"$;$;float$;long$;first)topcb_trades: {[msg]

msgDict: .j.k msg[data</i>];<br> convertedMsg: generalHelper[enlist msgDict; castRules];<br> <br> -1 "convertedMsg Content:";<br> -1 .Q.s convertedMsg;<br> <br> if[not 98h=type convertedMsg; // check if table<br> -1 "Error:convertedMsgis not a table.";<br> :() // Exit<br> ];<br> if[0=count convertedMsg; // check if empty<br> -1 "Error:convertedMsgis empty.";<br> :() // Exit<br> ];<br> <br> // Check data type<br> -1 "Data type:";<br> -1 raze ("time: ", string type each convertedMsg<i>time),

(" sym: ", string type each convertedMsgsym</i>),<br> (" price: ", string type each convertedMsg<i>price),

(" size: ", string type each convertedMsgsize</i>),<br> (" side: ", string type each convertedMsg<i>side);

trades </i>insert convertedMsg;<br> -1 "Insert in local tabletrades--> done.";<br> <br> h(".u.upd"; <i>trade; convertedMsg);

-1 "remote tickerplant update --> done.";

-1 .Q.s convertedMsg;

}-1 "Subscribing to topic

trades...";

.kfk.Subscribe[client; (topic_trades); enlist .kfk.PARTITION_UA; (topcb_trades)]

    pollKafkaAsync: {
      while[1b;
        .kfk.Poll[client; 0; 0];
        // q evaluates right to left, so the count must sit on the right of the comparison
        if[0 < count data_trades;
          -1 .Q.s last data_trades;
        ];
      ];
     }

    pollKafkaAsync[]
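To check the deserialization step in isolation, without Kafka or a tickerplant, something like the sketch below may help. The `payload` string is a made-up message standing in for the Kafka payload after it has been converted to a string (an assumption about how the data arrives), and the field names simply mirror the `trade` schema above.

```q
/ target schema, as in sym.q
trade:([]time:`timestamp$();sym:`symbol$();price:`float$();size:`long$();side:`char$());

/ hypothetical JSON payload, standing in for the message data as a string
payload:"{\"time\":\"2024.08.27D10:16:00.000\",\"sym\":\"EURUSD\",\"price\":1.0951,\"size\":100,\"side\":\"B\"}";

/ .j.k returns a dictionary: JSON strings stay strings, every number becomes a float
d:.j.k payload;

/ cast each field to its schema type; enlist turns the dictionary into a one-row table
row:enlist `time`sym`price`size`side!("P"$d`time;`$d`sym;`float$d`price;`long$d`size;first d`side);

/ insert succeeds only when the column types match the schema
`trade insert row;
```

Comparing `meta row` with `meta trade` before the insert is a quick way to spot a column whose type still differs from the schema.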

rdb.q

-

rgiu70

Member, August 25, 2024 at 10:54 am, in reply to: How to deserialize a Kafka topic message with kfk.q

-

So it is necessary to:

- Map the cumulative (high – low)

- If the cumulative (high – low) is greater than the set range, then a new bar is generated, independent of time

- When a new bar is generated, the high – low “counter” must reset and start from 0

Unfortunately, I can’t recreate this logic with scan. The example I shared is very rudimentary, but it works: every time the range exceeds the set value, an index number is incremented by 1 (the new bar index).
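One possible way to express the three steps above with scan is to carry a small state `(bar index; running high; running low)` from tick to tick. This is only a sketch: `step`, `px` and `r` are hypothetical names and values, and it assumes that the tick which pushes the range above `r` becomes the first tick of the new bar.

```q
/ hypothetical tick prices and bar range
px:100 103 108 112 111 105 99 101f;
r:10f;

/ step: s is the state (bar index; running high; running low), p the next price.
/ If adding p stretches high-low beyond r, start a new bar at p; otherwise widen the range.
step:{[r;s;p] h:s[1]|p; l:s[2]&p; $[r<h-l;(1+s 0;p;p);(s 0;h;l)]};

/ scan over the ticks, seeded with bar 0 at the first price
states:step[r]\[(0;first px;first px);px];

/ first item of every state is the bar index of that tick
bar:states[;0]
```

Here the index moves to 1 at the fourth tick (112 against a low of 100) and to 2 at the seventh (99 against a high of 112), with the high and low restarting from the breaking tick each time.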

-

-

Thank you for the response.

Specifically, I would like to create bars from a tick-by-tick dataset, where each bar has a specific range (for example, 10 pips). So, every time the max-min range is equal to 10, the “counter” should reset and start counting max-min again. Every time the range is greater than 10, a new OHLC bar is generated.
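Once every tick carries a bar index, building the OHLC bars is a plain grouped select. In the sketch below the table `t` and its `bar` column are made-up illustration data, not output from the thread.

```q
/ hypothetical ticks that already carry a bar index
t:([]bar:0 0 0 1 1 1 2 2;price:100 103 108 112 111 105 99 101f);

/ one OHLC row per bar
ohlc:select open:first price,high:max price,low:min price,close:last price by bar from t
```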